Monitoring Challenges

We access Cassandra through our standard NoSQL proxy layer Apollo, which gives us great application level performance and availability metrics for free via our uwsgi metrics framework. This immediately gives us the capability to alert developers when their Cassandra based application is slow or unavailable. Couple these pageable events with reporting the out of the box JMX metrics on query timing to customers and the monitoring story is starting to look pretty good for consumers of Cassandra.The main difficulty we faced was monitoring the state of the entire database for operators of Cassandra. Some of the metrics exposed over JMX are useful to operators. There are a lot of good resources online to learn which JMX metrics are most relevant to operators, so I won’t cover them here. Unfortunately, most of the advanced cluster state monitoring is built into

nodetool

which is useful for an operator tending to a specific cluster but does not

scale well to multiple clusters with a distributed DevOps ownership model where

teams are responsible for their own clusters. For example, how would one

robustly integrate the output of nodetool with

Nagios or Sensu?

OpsCenter is

closer to what we need, especially if you pay for the enterprise edition, but

the reality is that this option is expensive, does not monitor ring health in

the way we want and does not (yet) support 2.2 clusters.We need to be able to determine a datastore is going to fail before it fails. Good datastores have warning signs, but it’s a matter of identifying them. In our experience, the JMX metrics monitoring technique works well when you’re having a performance or availability problem isolated to one or two nodes. The technique falls flat, however, when trying to differentiate between an innocuous single node failure impacting a keyspace with a replication factor of five and a potentially critical single node failure impacting a keyspace with a replication factor of two.

Finding Cassandra’s Warning Signs

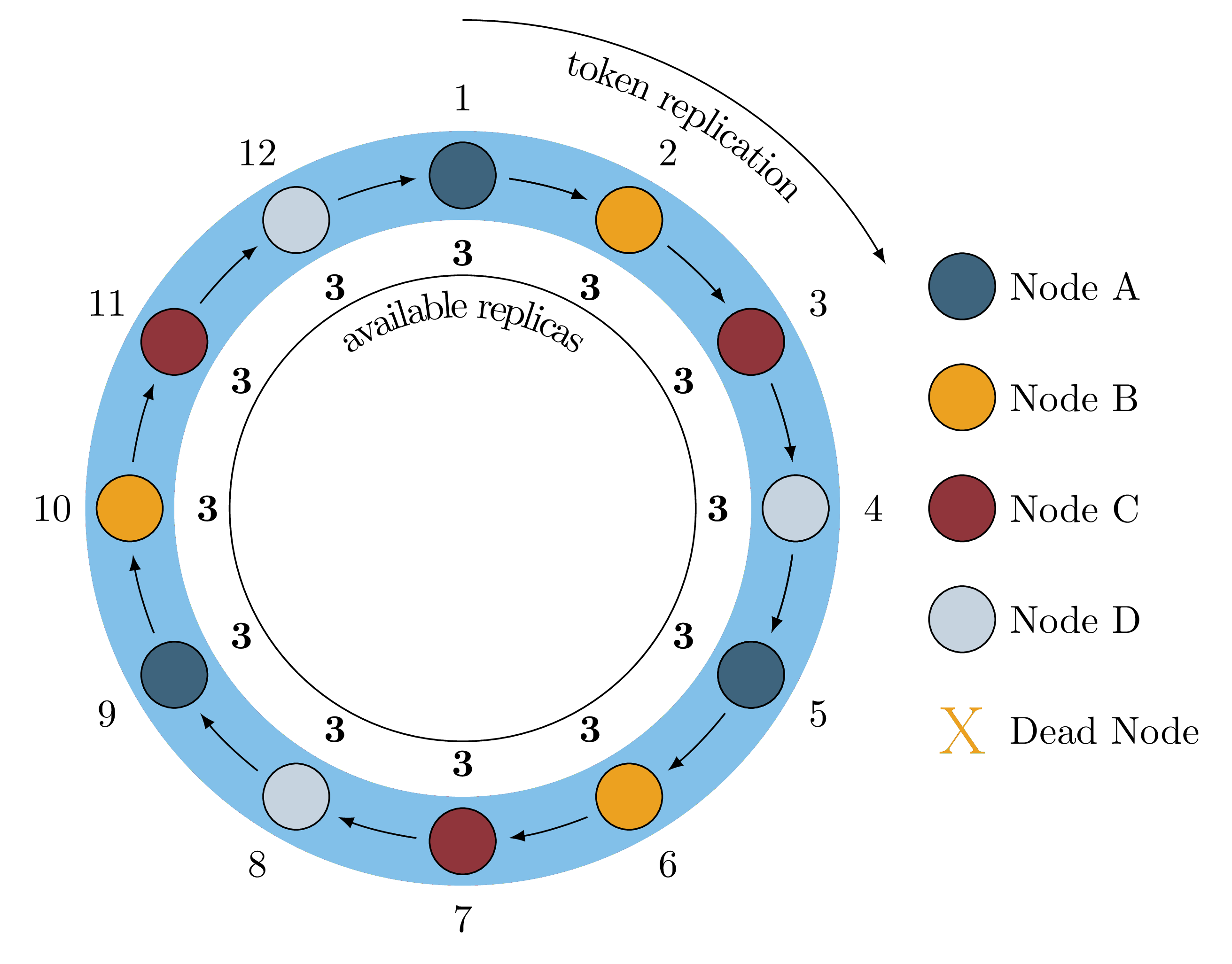

Cassandra uses a ring topology to store data. This topology divides the database into contiguous ranges and assigns each of the ranges to a set of nodes, called replicas. Consumers query the datastore with an associated consistency level which indicates to Cassandra how many of these replicas must participate when answering a query. For example, a keyspace might have a replication factor of 3, which means that every piece of data is replicated to three nodes. When a query is issued atLOCAL_QUORUM, we need to contact at least ⅔ of the

replicas. If a single host in the cluster is down, the cluster can still

satisfy operations, but if two nodes fail some ranges of the ring will become

unavailable.Figure 1 is a basic visualization of how data is mapped onto a single Cassandra ring with virtual nodes. The figure elides details of datacenter and rack awareness, and does not illustrate the typically much higher number of tokens than nodes. However, it is sufficient to explain our monitoring approach.

Figure 1: A Healthy Ring

Node A, Node B

and Node C. When all physical hosts are healthy, all token ranges have all

three replicas available, indicated by the threes on the inside of the ring.

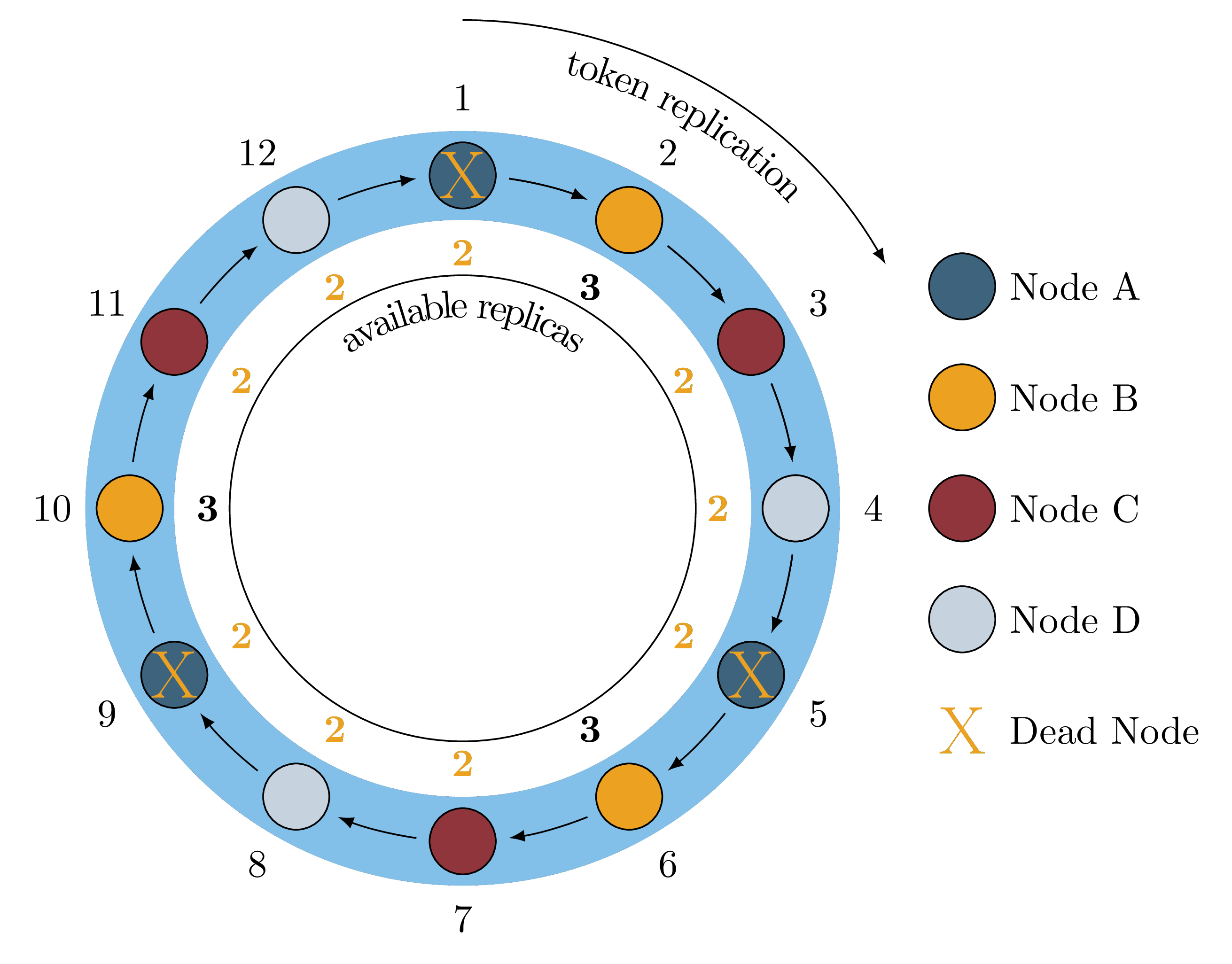

When a single node fails, say Node A, we lose a replica of nine

token ranges because any token range that would replicate to Node A is

impacted. For example, a key that would map to token range 8 would typically

replicate to Node D, Node A and Node B but it cannot replicate to to

Node A because Node A is down. This is illustrated in Figure 2.

Figure 2: Single Node Failure

LOCAL_QUORUM because ⅔ of

replicas are still available for all token ranges, but if we were to lose

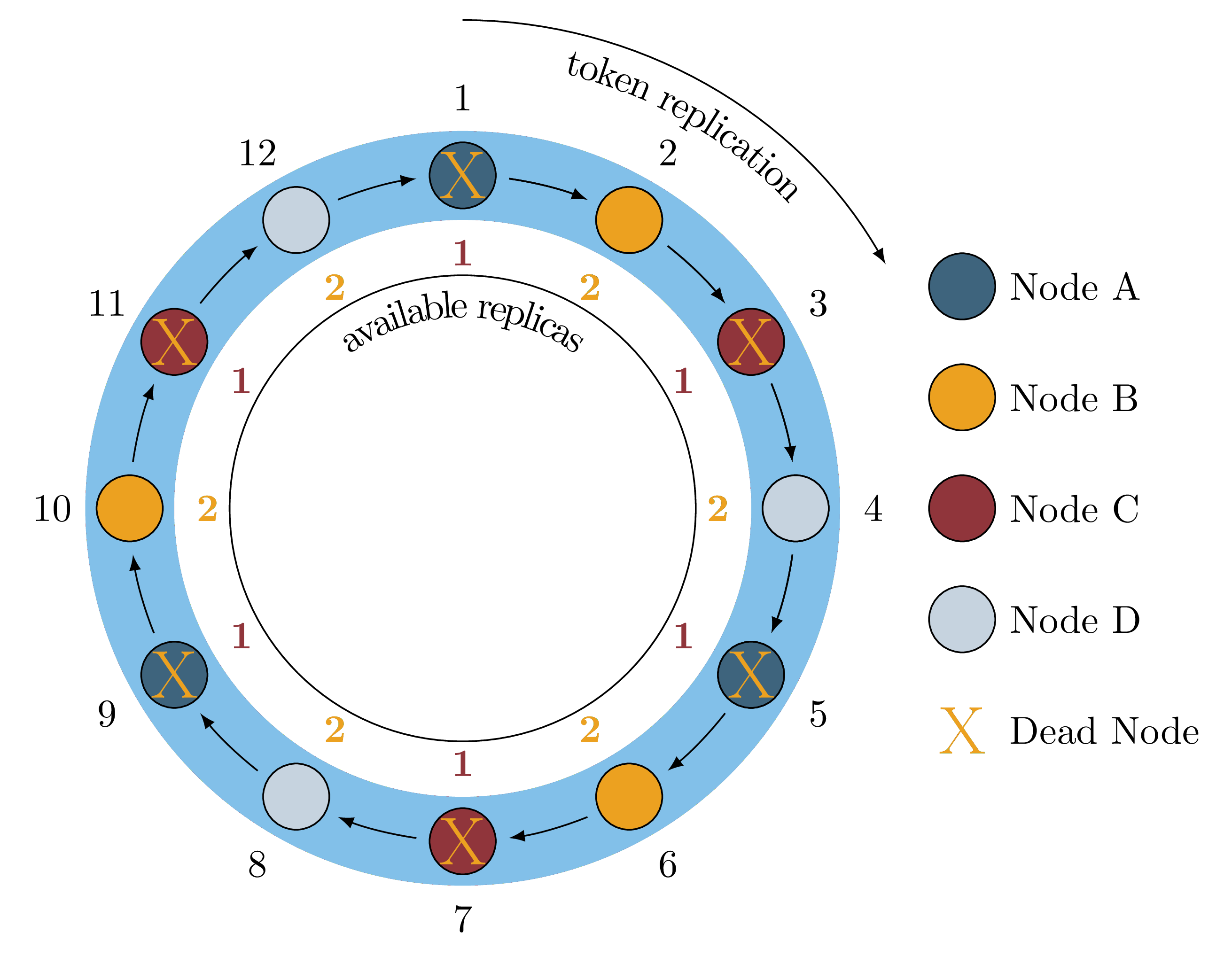

another node, say Node C, we would lose a second replica of six token ranges

as shown in Figure 3.

Figure 3: Two Node Failures

LOCAL_QUORUM, while any keys not in those ranges are still available.This understanding allows us to check if a cluster is unavailable for a particular consistency level of operations by inspecting the ring and verifying that all ranges have enough nodes in the “UP” state. It is important to note that client side metrics are not sufficient to tell if a single additional node failure will prevent queries from completing, because the client operations are binary: they either succeed or not. We can tell they are failing, but can’t see the warning signs before failure.

Monitoring Cassandra’s Warning Signs

Under the hood,nodetool uses a JMX interface to retrieve information like

ring topology, so with some sleuthing in the nodetool and Cassandra source

code we can find the following useful

mbeans:- type=StorageService/getRangeToEndpointMap/{keyspace}

- type=EndpointSnitchInfo/getDatacenter/{node}

- type=StorageService/Keyspaces

- type=StorageService/LiveNodes

LOCAL_ONE, which

means that we can get robust monitoring by deploying the above check twice: the

first monitoring LOCAL_ONE that pages operators, and the second monitoring

LOCAL_QUORUM that cuts a ticket on operators. When running with a replication

factor of three, this allows us to lose one node without alerting anyone,

ticket after losing two (imminently unavailable), and page upon losing all

replicas (unavailable).This approach is very flexible because we can find the highest consistency level of any operation against a given cluster and then tailor our monitoring to check the cluster at the appropriate consistency level. For example, if the application does reads and writes at quorum we would ticket after

LOCAL_ALL

and page on LOCAL_QUORUM. At Yelp, any cluster owner can control these

alerting thresholds individually.A Working Example

The real solution has to be slightly more complicated because of cross-datacenter replication and flexible consistency levels. To demo this approach really works, we can setup a three node Cassandra cluster with two keyspaces, one with a replication factor of one (blog_1) and one with a replication factor of three (blog_3). In this configuration each node has the default 256 vnodes.An important thing to understand is that this script probably won’t “just work” in your infrastructure, especially if you are not using jolokia, but it may be useful as a template for writing your own robust monitoring.

Take it to 11, What’s Next?

Our SREs can sleep better at night knowing that our Cassandra clusters will give us warning before they bring down the website, but at Yelp we always ask, “what’s next?”Once we can reliably monitor ring health, we can can use this capability to further automate our Cassandra clusters. For example, we’ve already used this monitoring strategy to enable robust rolling restarts that ensures ring health at every step of the restart. A project we’re currently working on is combining this information with autoscaling events to be able to intelligently react to hardware failure in an automated fashion. Another logical next step is to automatically deduce the consistency levels to monitor by hooking into our Apollo proxy layer and updating our monitoring from the live stream of queries against keyspaces. This way if we change the queries to a different consistency level, the monitoring follows along.

Furthermore, if this approach proves useful long term, it is fairly easy to integrate it into

nodetool directly, e.g.

nodetool health <keyspace> <consistency_level>.| replication_level = input(...) |

| alive_nodes = getLiveNodes(...) |

| keyspaces = getKeyspaces(...) - system_keyspaces() |

| underreplicated_keyspaces = {} |

| for keyspace in keyspace: |

| keyspace_ranges = getRangeToEndpointMap(keyspace) |

| for _, range_nodes in keyspace_ranges: |

| if |range_nodes ∩ alive_nodes| < replication_level: |

| underreplicated_keyspaces[keyspace] = True |

| if underreplicated_keyspaces: |

| alert_cluster_operator(underreplicated_keyspaces) |

| cqlsh:blog_1> DESCRIBE CLUSTER |

| Cluster: test |

| Partitioner: Murmur3Partitioner |

| Range ownership: |

| 3732272507813644288 [NODE_A] |

| 2788686370127196454 [NODE_B] |

| -7530935857429381116 [NODE_B] |

| 111150679707998215 [NODE_B] |

| 3524081553196673032 [NODE_A] |

| 2388757566836860943 [NODE_B] |

| 6174693015045179395 [NODE_A] |

| 81565236350140436 [NODE_B] |

| 9067682831513077639 [NODE_C] |

| 3254554184914284573 [NODE_B] |

| 8220009980887493637 [NODE_C] |

| ... |

| cqlsh:blog_1> use blog_3 ; |

| cqlsh:blog_3> DESCRIBE CLUSTER |

| Cluster: test |

| Partitioner: Murmur3Partitioner |

| Range ownership: |

| 3732272507813644288 [NODE_A, NODE_B, NODE_C] |

| 2788686370127196454 [NODE_B, NODE_C, NODE_A] |

| -7530935857429381116 [NODE_B, NODE_C, NODE_A] |

| 111150679707998215 [NODE_B, NODE_C, NODE_A] |

| 3524081553196673032 [NODE_A, NODE_B, NODE_C] |

| 2388757566836860943 [NODE_B, NODE_C, NODE_A] |

| 6174693015045179395 [NODE_A, NODE_B, NODE_C] |

| ... |

| # All nodes are running normally |

| # Check availability of keyspaces |

| (venv)$ ./check_cassandra_cluster --host NODE_A all |

| OK: cluster looks fine |

| (venv)$ ./check_cassandra_cluster --host NODE_A local_quorum |

| OK: cluster looks fine |

| # Stop NODE_A |

| # ... |

| # Check availability of keyspaces |

| (venv)$ ./check_cassandra_cluster --host NODE_B all |

| CRITICAL: cluster cannot complete operations at consistency level all. |

| [Underreplicated partitions: {u'blog_3': 768, u'blog_1': 256}] |

| (venv)$ ./check_cassandra_cluster --host NODE_B local_quorum |

| CRITICAL: cluster cannot complete operations at consistency level local_quorum. |

| [Underreplicated partitions: {u'blog_1': 256}] |

| (venv)$ ./check_cassandra_cluster --host NODE_B local_one |

| CRITICAL: cluster cannot complete operations at consistency level local_one. |

| [Underreplicated partitions: {u'blog_1': 256}] |

| # Stop NODE_B as well |

| # ... |

| # Check availability of keyspaces |

| (venv)$ ./check_cassandra_cluster --host NODE_C all |

| CRITICAL: cluster cannot complete operations at consistency level all. |

| [Underreplicated partitions: {u'blog_3': 768, u'blog_1': 512}] |

| (venv)$ ./check_cassandra_cluster --host NODE_C local_quorum |

| CRITICAL: cluster cannot complete operations at consistency level local_quorum. |

| [Underreplicated partitions: {u'blog_3': 768, u'blog_1': 512}] |

| (venv)$ ./check_cassandra_cluster --host NODE_C local_one |

| CRITICAL: cluster cannot complete operations at consistency level local_one. |

| [Underreplicated partitions: {u'blog_1': 512}] |

No comments:

Post a Comment